We are excited to announce the second round of the Open Humanities Awards. *The deadline for submissions to the awards has been extended to Friday 6 June 2014.*

There are €20,000 worth of prizes on offer in two dedicated tracks:

- Open track: for projects that use open content, open data or open source tools to further humanities teaching and research
- DM2E track: for projects that build upon the research, tools and data of the DM2E project

Whether you’re interested in patterns of allusion in Aristotle, networks of correspondence in the Jewish Enlightenment or digitising public domain editions of Dante, we’d love to hear about the kinds of open projects that could support your interest!

Why are we running these Awards?

Humanities research is based on the interpretation and analysis of a wide variety of cultural artefacts, including texts, images and audiovisual material. Much of this material is now freely and openly available on the internet, enabling people to discover, connect and contextualise cultural artefacts in ways that were previously very difficult.

We want to make the most of this new opportunity by encouraging budding developers and humanities researchers to collaborate and start new projects that use this open content and data, paving the way for a vibrant cultural and research commons to emerge.

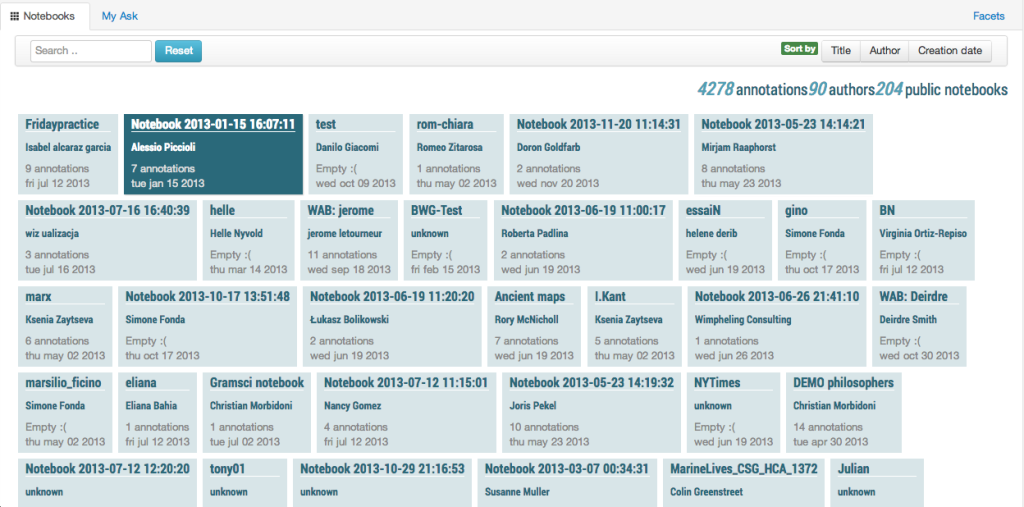

In addition, DM2E has developed tools to support Digital Humanities research, such as Pundit (a semantic web annotation tool), and delivered several interesting datasets from various content providers around Europe. The project is now inviting all researchers to submit a project building on this DM2E research in a special DM2E track.

Who can apply?

The Awards are open to any citizen of the EU.

Who is judging the Awards?

The Awards will be judged by a stellar cast of leading Digital Humanists:

What do we want to see?

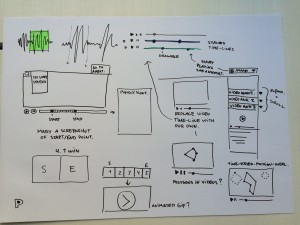

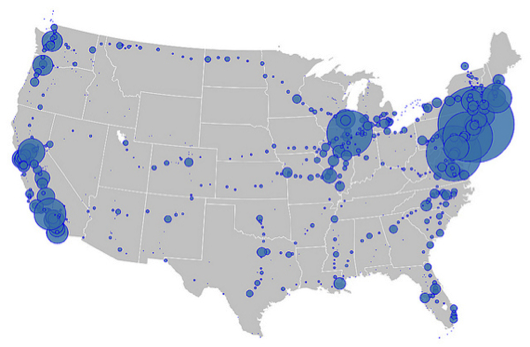

Maphub, an open source Web application for annotating digitized historical maps, was one of the winners of the first round of the Open Humanities Awards.

For the Open track, we are challenging humanities researchers, designers and developers to create innovative projects that use open content, open data or open source tools to further teaching or research in the humanities. For example, you might want to:

-

Start a project to collaboratively transcribe, annotate, or translate public domain texts

-

Explore patterns of citation, allusion and influence using bibliographic metadata or textmining

-

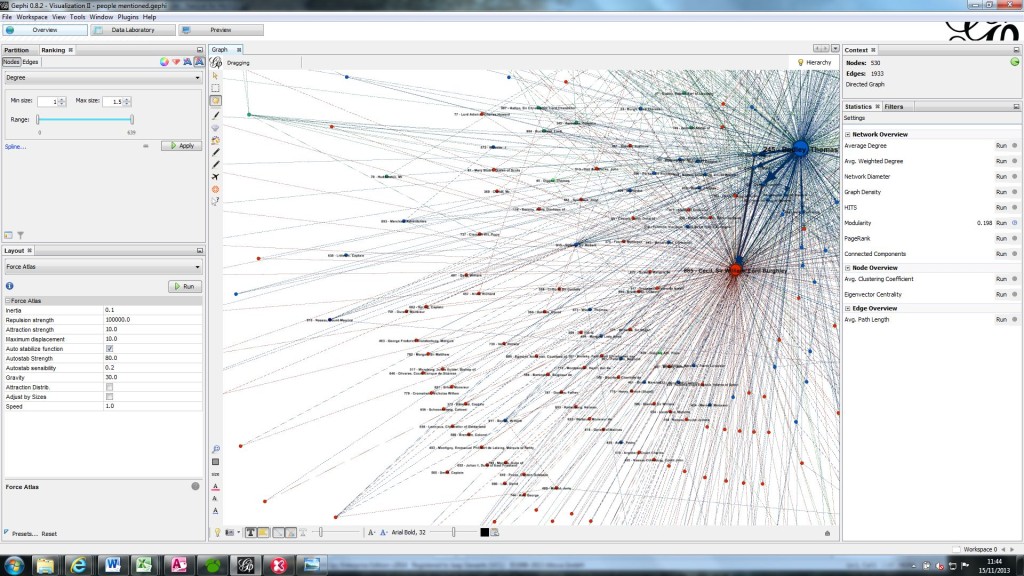

Analyse and/or visually represent complex networks or hidden patterns in collections of texts

-

Use computational tools to generate new insights into collections of public domain images, audio or texts

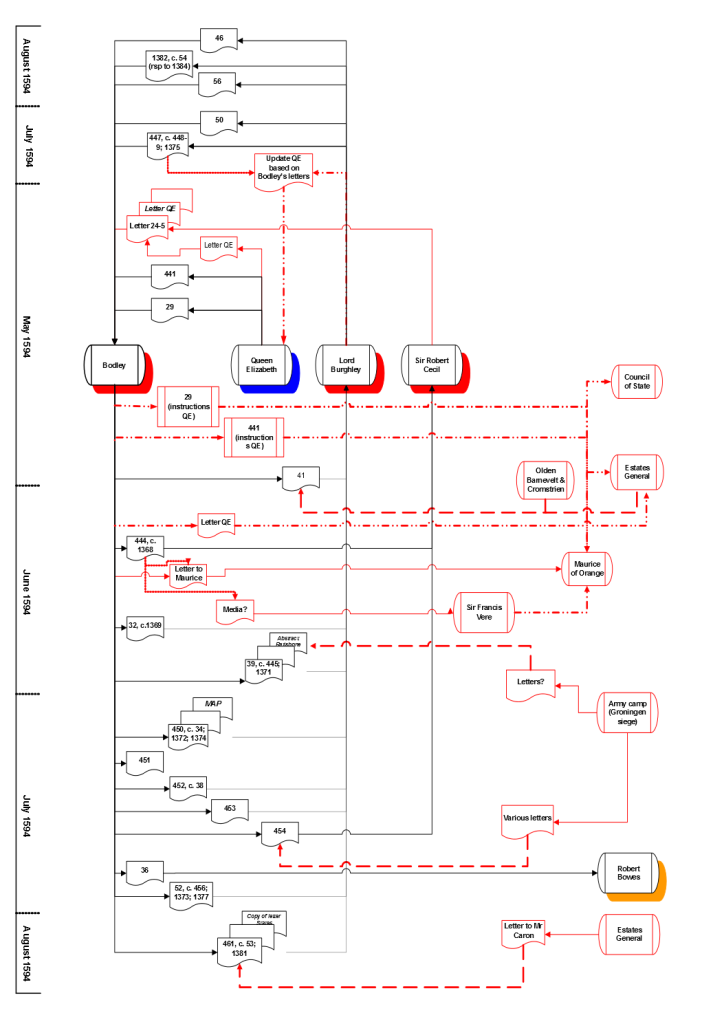

You could start a project from scratch or build on an existing project. For inspiration you can have a look at the final results of our first round winners: Joined Up Early Modern Diplomacy and Maphub, or check out the open-source tools the Open Knowledge Foundation has developed for use with cultural resources.

As long as your project involves open content, open data or open source tools and makes a contribution to humanities research, the choice is yours!

For the DM2E track, we invite you to submit a project building on the DM2E research. Information, code and documentation on the DM2E tools are available through our DM2E wiki, and the data is at http://data.dm2e.eu. Examples include:

- Building open source tools or applications based on the APIs developed
- A project focused on the visualisation of data coming from Pundit
- A deployment of the tools for specific communities
- A project using data aggregated by DM2E in an innovative way
- An extension of the platform by means of a practical demonstrative application

Who is behind the awards?

The Awards are being coordinated by the Open Knowledge Foundation and are part of the DM2E project. They are also supported by the Digital Humanities Quarterly.

How to apply

Applications are open from today (30 April 2014). Go to openhumanitiesawards.org to apply. The application deadline has been extended to 6 June 2014, so get going and good luck!

More information…

For more information on the Awards, including the rules and ideas for open datasets and tools to use, visit openhumanitiesawards.org.