*This is the first in a series of posts from Dr Michael Piotrowski, one of the recipients of the DM2E Open Humanities Awards – Open track.*

“Europäische Friedensverträge der Vormoderne online” (“Early Modern European Peace Treaties Online”) is a comprehensive collection of about 1,800 bilateral and multilateral European peace treaties from 1450 to 1789, published as an open access resource by the Leibniz Institute of European History (IEG). The metadata is currently stored in a relational database with a Web front-end. This project has two primary goals:

1. the publication of the treaty metadata as Linked Open Data, and

2. the evaluation of nanopublications as a representation format for humanities data.

You can find a longer description in the earlier blog post “Open Humanities Awards round 2 – winners announced”.

The project got off to a rocky start, as I had great difficulty finding someone to work on it. The IEG is a non-university research institute, so I do not have ready access to students, and in particular not to students with knowledge of Linked Open Data (LOD). I was about to give up when Magnus Pfeffer of the Stuttgart Media University called to tell me he’d be interested in working on it with his team. He has a lot of experience with LOD, so I’m very happy to have him working with me on the project.

We’ve now started to work on the first goal, publishing the treaty metadata as LOD. This should be a relatively straightforward process, whereas the second goal, evaluating the nanopublications approach, will be more experimental, since nobody has used it in such a context yet.

The process for converting the content of the existing database into LOD basically consists of four steps:

1. Analyzing the data. The existing database consists of 11 tables and numerous fields. Some of the fields have telling names, but not all of them. Another question is what the fields actually contain; it seems that creative solutions have sometimes been used. For example, the parties to a treaty are stored in a field declared as follows:

```sql
`partners` varchar(255) NOT NULL DEFAULT ''
```

This is a string field, but it doesn’t contain the names of the parties; rather, it contains their IDs, for example:

```
'37,46,253'
```

You can then look up the names in another table and find out that 37 is France, 46 is Genoa, and 253 is Naples-Sicily. This is a workaround for the problem of storing lists of variable length, which is quite tedious in a relational database. While this approach is clearly better than hardcoding the names of the parties in every record, it moves part of the semantics into the application, which has to know that what looks like a string is actually a list of keys into another table.

Now, this example is not particularly complicated, but it illustrates that a thorough analysis of the database is necessary in order to accurately extract and convert the information it contains.
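To make the point concrete, here is a minimal Python sketch of how a conversion script might decode the `partners` field. The dictionary stands in for the separate table of parties and contains only the three IDs from the example above; the names `PARTY_NAMES` and `decode_partners` are hypothetical.

```python
# Hypothetical stand-in for the table that maps party IDs to names;
# only the three IDs from the example above are included.
PARTY_NAMES = {
    37: "France",
    46: "Genoa",
    253: "Naples-Sicily",
}


def decode_partners(field: str) -> list[str]:
    """Turn a comma-separated string of party IDs into a list of party names.

    The schema only says "varchar"; the knowledge that the value is really a
    list of keys has to be re-implemented in every program that reads it.
    """
    if not field.strip():
        # The column is NOT NULL with an empty-string default, so an
        # empty value means that no parties were recorded.
        return []
    ids = [int(part) for part in field.split(",")]  # int() tolerates surrounding spaces
    # A missing ID raises KeyError, which flags a dangling reference.
    return [PARTY_NAMES[i] for i in ids]


print(decode_partners("37,46,253"))  # → ['France', 'Genoa', 'Naples-Sicily']
```

Nothing in the database enforces that the IDs in the string actually exist in the lookup table, which is why the sketch lets an unknown ID fail loudly instead of silently dropping it.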

2. Identifying and selecting pertinent ontologies. We don’t want to reinvent the wheel; rather, we want to build upon existing, proven ontologies for describing the treaties. One idea we’ve discussed is to model treaties as events; we could then use an ontology like LODE. However, we will first need to see what information we need to represent, i.e., what we find in the database.

3. Modelling the information in RDF. Once we know how to model the information conceptually, we need to define how to actually represent a treaty’s information in RDF.

4. Generating the data. Finally, we can iterate over the database, extract the information, combine it into RDF statements, and output them in a format that can be imported into a triple store.
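Steps 3 and 4 can be sketched in a few lines of Python. Purely for illustration, this assumes that a treaty is modelled as an event using LODE’s `lode:Event` class and `lode:involvedAgent` property, which is one of the options mentioned in step 2, not a decision. The `example.org` base IRIs, the function names, and the single placeholder row standing in for a database cursor are all hypothetical. The output is N-Triples, a simple line-based RDF serialization.

```python
from collections.abc import Iterable, Iterator

# RDF, RDFS and LODE are real vocabularies; the example.org bases are
# hypothetical placeholders for wherever the data will be published.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
LODE = "http://linkedevents.org/ontology/"
TREATY_BASE = "http://example.org/treaty/"  # hypothetical
PARTY_BASE = "http://example.org/party/"    # hypothetical


def iri(value: str) -> str:
    return f"<{value}>"


def literal(text: str) -> str:
    """Serialize a string as an N-Triples literal, escaping special characters."""
    escaped = (
        text.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return f'"{escaped}"'


def treaty_triples(treaty_id: int, title: str, partners: str) -> Iterator[str]:
    """Yield N-Triples lines for one treaty row, modelled as a LODE event."""
    subject = iri(f"{TREATY_BASE}{treaty_id}")
    yield f"{subject} {iri(RDF_TYPE)} {iri(LODE + 'Event')} ."
    yield f"{subject} {iri(RDFS_LABEL)} {literal(title)} ."
    # The partners column holds comma-separated party IDs (see step 1), so each
    # ID becomes a link to a party resource instead of a plain string.
    for party_id in (int(p) for p in partners.split(",") if p.strip()):
        yield f"{subject} {iri(LODE + 'involvedAgent')} {iri(f'{PARTY_BASE}{party_id}')} ."


def export(rows: Iterable[tuple[int, str, str]]) -> str:
    """Convert (id, title, partners) rows into one N-Triples document."""
    return "\n".join(line for row in rows for line in treaty_triples(*row)) + "\n"


# Placeholder row standing in for a database cursor; the title is not real data.
rows = [(1, "Example treaty title", "37,46,253")]
print(export(rows), end="")
```

Writing the serializer by hand keeps the sketch dependency-free; an actual conversion would more likely use an RDF library such as rdflib, which also handles other serializations like Turtle.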

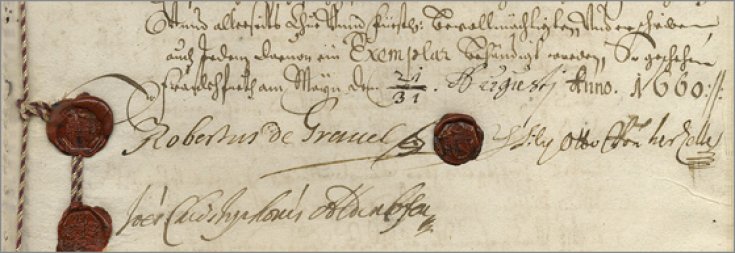

At this point, the basic data on the treaties will be available as LOD. However, some very interesting information is only available as unstructured text, for example the references to secondary literature or the names of the signatories. We’ll then probably return to the database to see what additional information could be extracted, with reasonable effort, for inclusion.

Extracting the basic information should be straightforward, but, as always when dealing with legacy data, we may be in for some surprises…
